Recently, I’ve been conducting code reviews for my team’s end-to-end test automation project, which is built with Playwright. I spend a couple of hours each day on this, either reviewing others’ code or responding to feedback on my own pull requests.

I firmly believe that we, as test automation engineers, should treat test automation like any other software, because test automation is software development. That means having solid knowledge of the same tools and practices software developers rely on: coding and naming standards, configuration management, code review practices, modularization, abstraction, static analysis tools, SOLID and DRY principles, and so on. A well-established code review process is one of the success factors for a test automation project. There are many good resources on how to conduct code reviews, such as the code review best practices published by Google, GitLab and others. In this article, I would like to point out several aspects I pay attention to when reviewing test automation code, in addition to the standard guidelines.

Automate what can be automated!

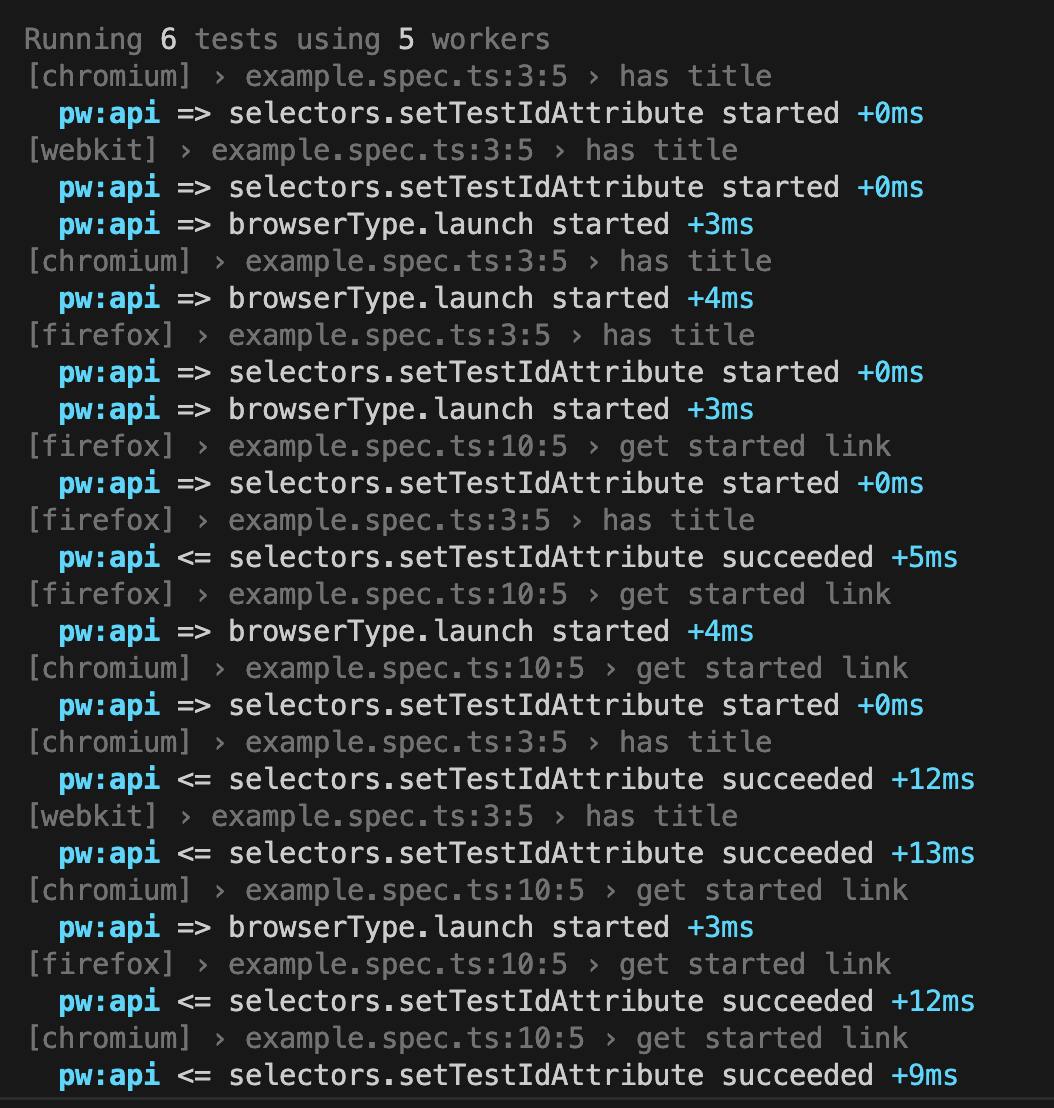

Make your life easier 🙂 Automation can take care of many likely run-time errors, stylistic issues, formatting problems, and more before a reviewer ever sees the code. Numerous tools are available to assist with this. For a Playwright project using TypeScript, I recommend installing and configuring the following:

- ESLint: This tool performs static analysis of your code to identify problems. ESLint integrates with most IDEs and can run as part of your CI/CD pipeline.

- Prettier: A code formatter that is helpful in enforcing a consistent format across your codebase.

- Husky: Makes it easy to set up Git hooks, for example to run the linter and formatter before each commit.

In this detailed guide by Butch Mayhew, you can find all the information you need to install and configure these tools in your project.

Identify easy-to-spot issues first

Start with the preliminary checks that must pass before the PR can be merged: merge conflicts, outdated branches, failed static analysis or formatter checks. Then scan briefly for easy-to-spot poor coding practices and errors: naming convention violations, leftover debug lines (for example, console.log()), formatting, long or complex functions, unnecessary comments, typos and so on. You might also spot violations of rules agreed within the team, such as a missing test case ID or description.

Verify that each test focuses on a single aspect.

The general guideline is that a test should verify one thing, reflecting its primary objective: ideally a single assertion, or a few closely related assertions about the same behavior. For example, if you’re verifying that a button is correctly displayed and functional on the UI, the test should be limited to that specific check.

Here’s an example using Playwright for a TypeScript project:

```typescript
import { test, expect } from '@playwright/test';

test('should display and enable the submit button', async ({ page }) => {
  await page.goto('https://example.com');

  const submitButton = page.locator('#submit-button');

  await expect(submitButton).toBeVisible();
  await expect(submitButton).toBeEnabled();
});
```

Additionally, name the test to reflect its purpose, capturing the intent rather than the implementation details.

Separation of concerns

Separation of concerns is a fundamental design principle worth sticking to. When structuring code with functions and methods, it’s crucial to determine the appropriate scope for each. Ideally, a function should do one thing and one thing only. Following this approach leads to a clear and manageable codebase.

In UI testing, the most popular approach for maintaining separation of concerns is the Page Object Pattern. This pattern separates the code that interacts with the DOM from the code that contains the test steps and assertions.
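A minimal sketch of the pattern, under a few assumptions: the login page, its labels, the `/login` path and the `LoginPage` class are hypothetical, and `PageLike` and `LocatorLike` are small stand-in interfaces declared here so the snippet runs without a browser. In a real project you would import `Page` and `Locator` from `@playwright/test` instead.

```typescript
// Stand-in types (assumption): only the few methods this sketch needs.
// In a Playwright project, use Page and Locator from '@playwright/test'.
interface LocatorLike {
  fill(value: string): Promise<void>;
  click(): Promise<void>;
}

interface PageLike {
  goto(url: string): Promise<unknown>;
  getByLabel(text: string): LocatorLike;
  getByRole(role: string, options?: { name?: string }): LocatorLike;
}

// Page object: the only place that knows how to find elements on the
// (hypothetical) login page. It contains no assertions.
class LoginPage {
  private readonly page: PageLike;
  private readonly emailInput: LocatorLike;
  private readonly passwordInput: LocatorLike;
  private readonly signInButton: LocatorLike;

  constructor(page: PageLike) {
    this.page = page;
    this.emailInput = page.getByLabel('Email');
    this.passwordInput = page.getByLabel('Password');
    this.signInButton = page.getByRole('button', { name: 'Sign in' });
  }

  // Relative path: assumes a base URL is configured for the project.
  async open(): Promise<void> {
    await this.page.goto('/login');
  }

  async signIn(email: string, password: string): Promise<void> {
    await this.emailInput.fill(email);
    await this.passwordInput.fill(password);
    await this.signInButton.click();
  }
}
```

A test built on this class reads as a sequence of steps (`open`, `signIn`) followed by its own assertions, while every locator lives in one place.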

Proper separation of concerns within tests also means placing setup and teardown steps in separate functions or methods, or in beforeEach and afterEach hooks. This practice makes it easier to understand the core validation of the test without being distracted by the preparatory steps. Importantly, setup and teardown functions should avoid assertions; instead, they should throw exceptions if errors occur. This approach ensures that the primary focus of the test remains on its intended verification.
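As a sketch of setup code that throws instead of asserting: the `ApiClient` interface, the `/users` endpoint and the `createTestUser` helper below are hypothetical names invented for illustration, not part of Playwright.

```typescript
// Hypothetical minimal API client used only for test data setup.
interface ApiResponse {
  ok: boolean;
  status: number;
  body: unknown;
}

interface ApiClient {
  post(path: string, data: unknown): Promise<ApiResponse>;
}

// Setup helper: creates a user for the test to work with.
// A failure here means the precondition is broken, not that the feature
// under test is wrong, so it throws an error rather than using an assertion.
async function createTestUser(api: ApiClient, email: string): Promise<string> {
  const response = await api.post('/users', { email });
  if (!response.ok) {
    throw new Error(`Setup failed: could not create user ${email} (HTTP ${response.status})`);
  }
  const body = response.body as { id?: unknown } | null;
  if (typeof body?.id !== 'string') {
    throw new Error('Setup failed: response did not contain a user id');
  }
  return body.id;
}
```

In a Playwright suite, a helper like this would typically be called from `test.beforeEach`, with the matching cleanup in `test.afterEach`, so that a broken precondition is reported as a setup error rather than as a failed verification.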

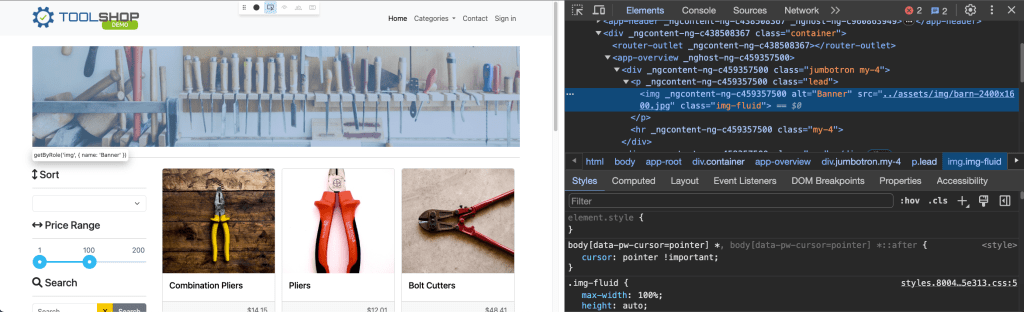

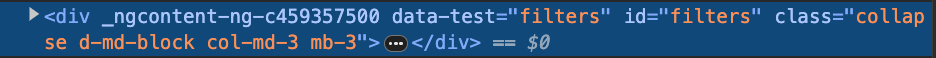

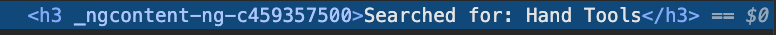

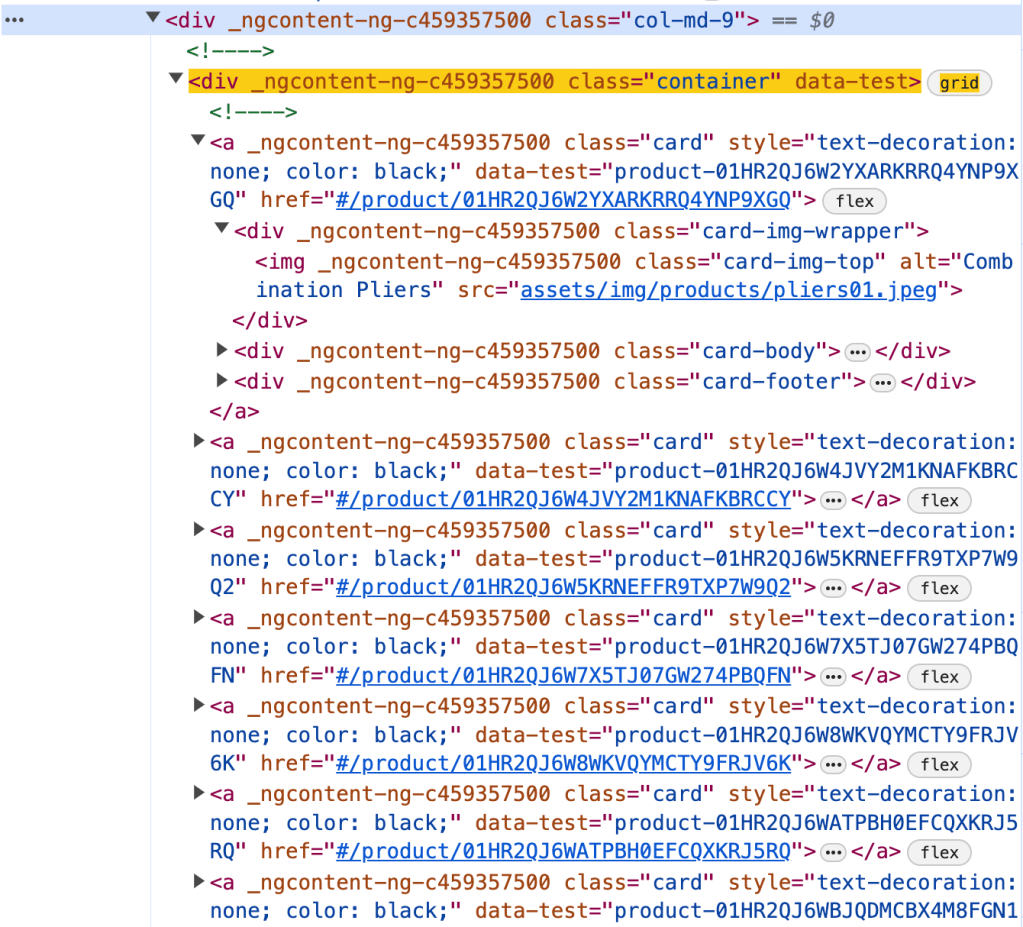

Is the locator / selector strategy solid?

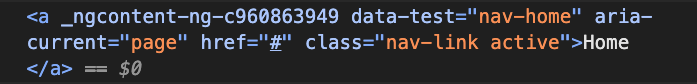

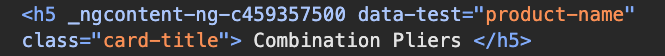

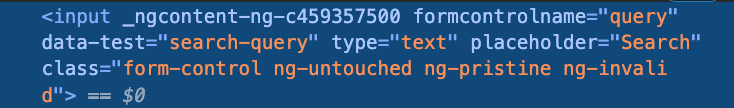

A solid locator/selector strategy is crucial for ensuring that your tests are stable and maintainable. This means using selectors that are resilient to changes in the UI and are as specific as necessary to avoid false positives. It’s important to explore framework-specific best practices for locator or selector strategies. For example, Playwright best practices recommend using locators and user-facing attributes.

To make your test framework resilient to DOM changes, avoid relying on the DOM structure directly. Instead, use locators that are resistant to DOM modifications:

```typescript
page.getByRole('button', { name: 'submit' });
```

Different frameworks may have their own guidelines for building element locating strategies, so it’s beneficial to consult the tool-specific documentation for best practices.

Hard-coded values.

Hard-coded values can hurt the flexibility and maintainability of an automation framework over time. Here are a few questions to ask while reviewing:

- Can we use data models to verify data types at runtime? Consider implementing data models to validate data types during execution, ensuring robustness and reducing errors.

- Should this variable be a shared constant? Evaluate if the value is used in multiple places and would benefit from being defined as a constant for easier maintenance.

- Should we pass this parameter as an environment variable or external input? This approach can significantly improve configurability and adaptability.

- Can we extract this value directly from the API? Investigate if the value can be dynamically retrieved from the API, reducing the need for hard-coding and improving reliability.
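The first three questions might translate into code along these lines. This is a sketch only: the `BASE_URL` and `TEST_USER_EMAIL` variable names, the default values and the `User` shape are assumptions made for illustration.

```typescript
// Shared constant: defined once instead of repeating the literal in tests.
const DEFAULT_TIMEOUT_MS = 10_000;

interface TestConfig {
  baseUrl: string;
  userEmail: string;
  timeoutMs: number;
}

// Reads settings from environment variables (pass process.env in Node.js),
// falling back to a default base URL suitable for a local run.
function loadConfig(env: Record<string, string | undefined>): TestConfig {
  const userEmail = env['TEST_USER_EMAIL'];
  if (!userEmail) {
    throw new Error('TEST_USER_EMAIL must be set');
  }
  return {
    baseUrl: env['BASE_URL'] ?? 'http://localhost:3000',
    userEmail,
    timeoutMs: DEFAULT_TIMEOUT_MS,
  };
}

// Data model with a runtime check: a type guard verifies that a value
// coming from outside (for example an API response) has the expected shape.
interface User {
  id: number;
  email: string;
}

function isUser(value: unknown): value is User {
  if (typeof value !== 'object' || value === null) {
    return false;
  }
  const candidate = value as Record<string, unknown>;
  return typeof candidate['id'] === 'number' && typeof candidate['email'] === 'string';
}
```

Calling `loadConfig(process.env)` once and passing the result around keeps environment-specific values out of individual tests, and `isUser` lets a test fail with a clear message when a response no longer matches the expected model.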

Is the code properly abstracted and structured?

As test automation code tends to grow rapidly, it is important to ensure that common code is properly abstracted and reusable by other tests. Data structures, page objects and API utilities should be kept separate from each other and organized consistently.

But don’t overuse abstraction: tolerate a little duplication in favour of readability.

Code Comments

Code comments should not duplicate information the code can provide. Comments should provide context and rationale that the code alone cannot. Additionally, functions and classes should follow a self-explanatory naming convention, making their purpose clear without needing additional comments.
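A small illustration of the difference, using a hypothetical retry helper. The function name, the three-attempt limit and the stated reason are all invented for the example.

```typescript
// A comment that only restates the code adds nothing, for example:
//   "multiply attempt by BACKOFF_STEP_MS"
//
// The comment below instead records context the code cannot express.

// The (hypothetical) staging search index is eventually consistent, so a
// freshly created record may take a moment to appear. Back off linearly
// and give up after 3 attempts to keep the suite fast.
const MAX_ATTEMPTS = 3;
const BACKOFF_STEP_MS = 500;

// The name says what the function returns, so it needs no further comment.
function retryDelayMs(attempt: number): number {
  if (attempt < 1 || attempt > MAX_ATTEMPTS) {
    throw new RangeError(`attempt must be between 1 and ${MAX_ATTEMPTS}`);
  }
  return attempt * BACKOFF_STEP_MS;
}
```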

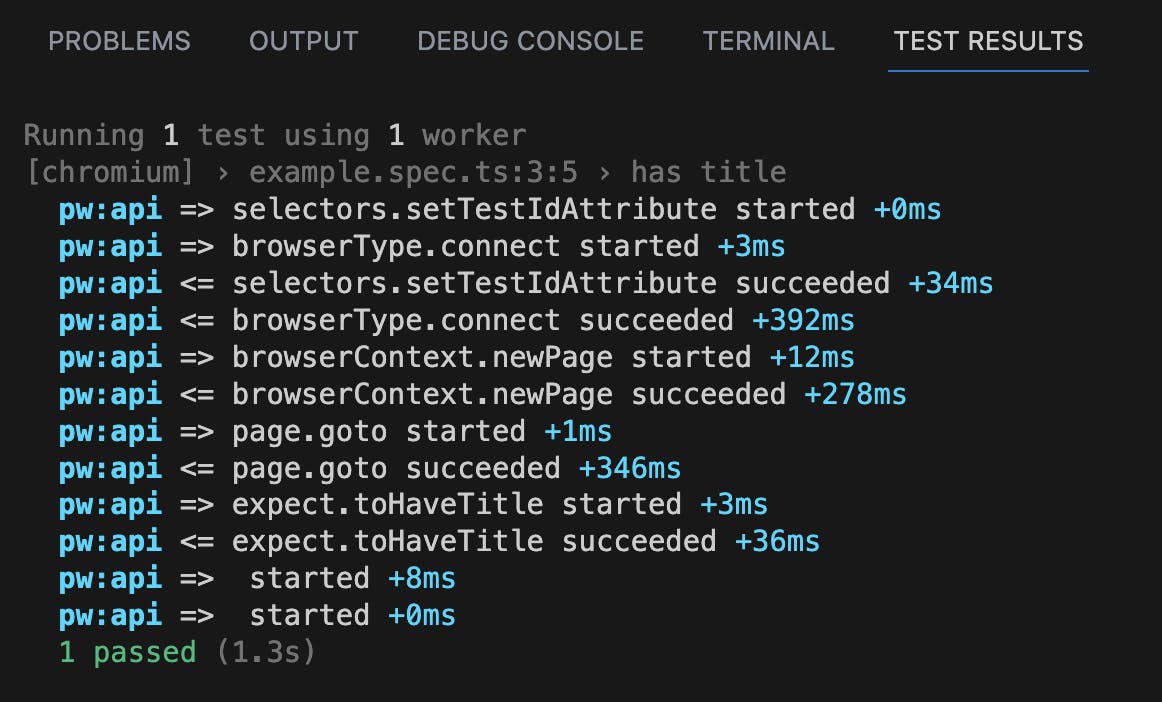

“Trust, but verify.”

Don’t rely on an automated test until you’ve seen it fail. If you can’t modify the test to produce a failure, it might not be testing what you intend. Additionally, be wary of unstable test cases that intermittently pass or fail. Such tests need to be improved, fixed, or removed altogether to ensure reliability.
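One common way a test ends up unable to fail is a missing `await`. The sketch below reproduces the problem without a browser; `FakeBannerPage`, `isBannerVisible` and `assertTruthy` are hypothetical stand-ins, not Playwright APIs.

```typescript
// Hypothetical stand-in for a page with an async visibility query.
class FakeBannerPage {
  private readonly bannerVisible: boolean;

  constructor(bannerVisible: boolean) {
    this.bannerVisible = bannerVisible;
  }

  async isBannerVisible(): Promise<boolean> {
    return this.bannerVisible;
  }
}

// Hypothetical minimal assertion helper.
function assertTruthy(value: unknown): void {
  if (!value) {
    throw new Error('Expected a truthy value');
  }
}

// Broken: the promise is not awaited. A Promise object is always truthy,
// so this check passes even when the banner is hidden.
function brokenBannerCheck(page: FakeBannerPage): void {
  assertTruthy(page.isBannerVisible());
}

// Fixed: the awaited boolean is asserted, so a hidden banner fails the check.
async function fixedBannerCheck(page: FakeBannerPage): Promise<void> {
  assertTruthy(await page.isBannerVisible());
}
```

Running both checks against a page where the banner is hidden exposes the difference: only the fixed version goes red, which is exactly what deliberately breaking a test during review is meant to reveal.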

Communication is the key.

Navigating the human aspects of code reviews can be as challenging as the technical ones. Here are some strategies that have worked for me when reviewing code.

- I often engage with the code by asking clarifying questions. For example:

  - “How does this method work?”

  - “If this requirement changes, what else would need to be updated?”

  - “How could we make this more maintainable?”

- Praise the good! Notice when people do something well and praise them for it. Positive feedback from peers is highly motivating.

- Focus on the code, not the person. It’s important to frame discussions around the code itself rather than the person who wrote it. This helps reduce defensiveness and keeps the focus on improving the code quality.

- Discuss detailed points in person. Sometimes, a significant change is easier to discuss face-to-face rather than in written comments. If a discussion is becoming lengthy or complex, I’ll often suggest continuing it in person.

- Explain your reasoning. When suggesting changes, it’s helpful to explain why you think the change is necessary and ask if there might be a better alternative. Providing context can prevent suggestions from seeming nit-picky.

Conclusion

This is not an exhaustive list of considerations for code reviews. For more guidance, I recommend checking out articles by Andrew Knight and Angie Jones. Their insights can provide additional strategies to enhance your code review process.