Introduction

Just a decade ago, leveraging the power of AI models required significant investments of time, resources, and expertise. Developing and deploying AI models necessitated extensive training and dedicated infrastructure, often requiring businesses to hire specialized experts for development and maintenance. This process was cumbersome and inaccessible to many businesses. However, with recent advances in Large Language Models (LLMs), the landscape has shifted dramatically, and we are starting to benefit from what is commonly referred to as the “Democratization of AI.”

“Democratization of artificial intelligence means making AI available for all. In other words, open-source datasets and tools developed by companies like Microsoft and Google – which demand less knowledge of AI from the user – are made available so that anyone can build innovative AI software. This has led to the rise of ‘citizen data scientists’.”

— The Ultimate Guide to Democratization in Artificial Intelligence

As a result, human resources and support staff can use AI to compile comprehensive responses in a few minutes, while social media professionals can generate engaging announcements with the help of a couple of simple prompts. Testing and development are no exception. Testing, a critical aspect of product quality assurance, benefits immensely from generative AI (GenAI) tools. What sets GenAI apart is its ability to summarize, analyze, and generate information in a manner that enhances testing efficiency and effectiveness. Testers can use LLMs to accelerate testing procedures, conduct more thorough assessments, and support continuous improvement in product quality.

What are Large Language Models?

How can people with limited experience in building and using AI best approach its principles and practical applications? Luckily, the Computerphile video “AI Language Models & Transformers” explains the fundamental principles of how LLMs work.

In this video, Rob Miles illustrates the concept by using an example of typing on a smartphone keyboard. As you type, the keyboard suggests words based on the beginning of the sentence, updating its suggestions as you select options. This simple analogy mirrors how LLMs operate: they use probabilities, learned through extensive training on vast datasets, to predict the next word.
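The keyboard analogy can be made concrete with a toy model. The sketch below is not how an LLM is built; it is a minimal bigram counter, with a made-up corpus and hypothetical function names, that only illustrates the idea of picking the next word by estimated probability.

```python
from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def suggest_next(model: dict[str, Counter], word: str, k: int = 3) -> list[tuple[str, float]]:
    """Return up to k candidate next words with their estimated probabilities."""
    counts = model.get(word.lower())
    if not counts:
        return []  # the word never appeared with a follower in the training text
    total = sum(counts.values())
    return [(candidate, count / total) for candidate, count in counts.most_common(k)]


# Tiny illustrative corpus; a real model is trained on vastly more text.
corpus = (
    "the test passed the test failed the test passed "
    "the build failed the build passed"
)
model = train_bigram_model(corpus)

# After "test" the corpus contains "passed" twice and "failed" once,
# so "passed" is suggested first.
print(suggest_next(model, "test"))
```

An LLM differs in scale and in mechanism (it conditions on the whole preceding context rather than on one word), but the output is similar in kind: a ranked set of likely continuations rather than a single certain answer.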

If you’d like to learn more about LLMs and how they are trained, in a nutshell, check out this article by Tim Lee, a journalist with a master’s degree in computer science, and Sean Trott, a cognitive scientist at the University of California, San Diego: Large language models, explained with a minimum of math and jargon

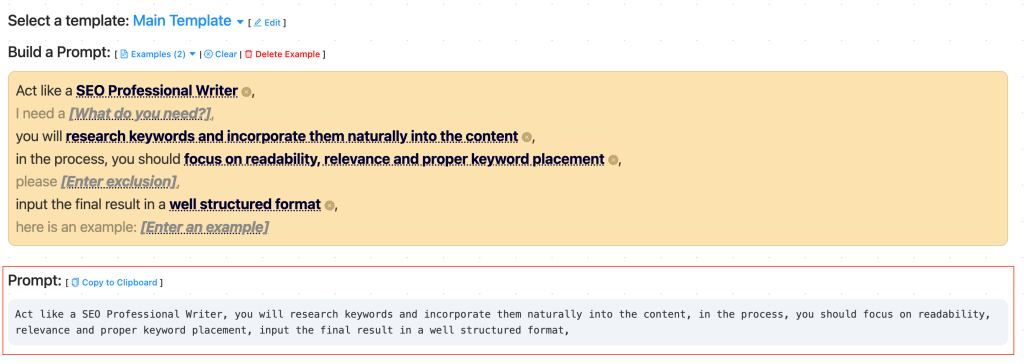

Given that LLMs operate on probabilities, achieving the desired outcomes often requires adjusting how we communicate, which may differ from normal human interaction. This is where prompt engineering comes into play. It is a collection of patterns and techniques for writing prompts that models respond to well. While I won’t delve deeply into this topic in this article, I do want to highlight a recent template developed by Dimitar Dimitrov. This resource, accessible at LLM Prompting, can be particularly valuable for beginners looking to construct prompts that produce better results.

What can LLMs do?

- Generative Capabilities

Generative AI refers to the ability to produce original natural language output. Large Language Models (LLMs) excel at generating new content based on their training and the prompts provided. However, it’s essential to understand that the generation process is probabilistic. Additionally, LLMs may lack context about specific features or products. Therefore, providing adequate information and clear instructions about the expected output is crucial.

- Transformation Capabilities

LLMs can efficiently convert data and code from one form to another. For example, they are proficient at migrating tests from Selenium to Cypress or from Selenium to Playwright, and at converting code from Python to JavaScript.

- Enhancing Capabilities

LLMs enable us to enhance and enrich existing information through various means. In April 2023 Similarweb, a market competition analysis company, reported that Stack Overflow’s traffic in the preceding month had dropped by 14%. Copilot utilizes the same LLM as ChatGPT, proficient in interpreting and generating human and programming languages. So, with a plugin integrated into VS Code, developers can delegate the implementation of entire functions to Copilot instead of searching for them on Stack Overflow. Source: Stack Overflow is ChatGPT Casualty: Traffic Down 14% in March.

Moreover, ChatGPT makes a thoughtful pairing partner, an advanced version of the “rubber duck”, for everything from analyzing ideas to analyzing code and solving code-related problems.

How can we leverage AI in testing?

- Formulate test ideas

Risk Identification and Test Idea Generation: Relying only on LLM-generated output to define testing decisions should be avoided. Instead, LLMs can serve as valuable tools for suggesting test ideas and identifying potential risks. These suggestions can then be used as starting points for further exploration or integrated into existing testing frameworks.

Broadened Analysis: LLMs contribute to expanding analysis endeavors such as risk assessment and shift-left testing. By feeding them existing analysis data, LLMs can offer insights and suggest new ideas for incorporation into our analysis frameworks, enriching the overall assessment process.

- Test Cases Implementation

Code Snippets: While expecting LLMs to generate complete automated tests or frameworks may yield limited value, leveraging them to generate smaller components such as code snippets can be highly advantageous. These snippets can support testing activities like exploratory testing, enhancing efficiency and effectiveness.

Code Conversion: LLMs excel at converting functions, classes, and other code components into other forms. Their value lies in their capacity to retain the logic and flow of the original code while translating it into different languages.

Descriptive Annotations: Similar to code review, LLMs assist in enhancing code descriptiveness, enabling the rapid creation and maintenance of code comments. This proves invaluable in automated testing scenarios where clear communication of automation logic is vital for maintenance purposes.

Examples:

- ZeroStep https://github.com/zerostep-ai/zerostep: makes it easier to write test cases with Playwright.

- Postbot – AI-powered Postman Assistant: https://beththetester.wordpress.com/2023/06/12/5-ways-postmans-ai-postbot-can-help-your-testing/

- Visual testing with Applitools: https://applitools.com/

- Copilot: https://copilot.microsoft.com/

- Generate test data and prepare test environments

Test Data Generation: LLMs, when equipped with explicit rules, can easily generate sets of data suitable for a variety of testing purposes.
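As a minimal sketch of what “explicit rules” can look like in practice, the snippet below turns a set of rules into a prompt and then checks a response against the same rules. The rules, field names, and sample response are all hypothetical, and the actual model call is left out, since it depends on the LLM you use.

```python
import json
import re

# Hypothetical rules for a "user" record; adjust to the system under test.
RULES = {
    "username": "3-12 lowercase letters or digits",
    "age": "integer between 18 and 99",
    "email": "valid-looking address on the example.com domain",
}


def build_prompt(rules: dict[str, str], count: int) -> str:
    """Turn explicit rules into a prompt that asks for JSON only."""
    rule_lines = "\n".join(f"- {field}: {rule}" for field, rule in rules.items())
    return (
        f"Generate {count} test user records as a JSON array of objects.\n"
        f"Each object must follow these rules:\n{rule_lines}\n"
        "Return only the JSON array, with no commentary."
    )


def validate_record(record: dict) -> list[str]:
    """Return the names of the fields that violate the rules."""
    problems = []
    if not re.fullmatch(r"[a-z0-9]{3,12}", str(record.get("username", ""))):
        problems.append("username")
    age = record.get("age")
    if not (isinstance(age, int) and not isinstance(age, bool) and 18 <= age <= 99):
        problems.append("age")
    if not re.fullmatch(r"[^@\s]+@example\.com", str(record.get("email", ""))):
        problems.append("email")
    return problems


# Stand-in for a model response; a real one would come from the LLM.
sample_response = """[
  {"username": "anna42", "age": 31, "email": "anna42@example.com"},
  {"username": "Bob", "age": 17, "email": "bob@example.org"}
]"""

prompt = build_prompt(RULES, count=2)
records = json.loads(sample_response)
report = {record["username"]: validate_record(record) for record in records}

print(prompt)
print(report)  # the second record breaks all three rules
```

Keeping the rules in one place means the same definitions drive both the request and the check, so generated data that drifts from the rules is caught before it reaches a test.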

Data Transformation: Leveraging LLMs for data transformation can improve testing processes significantly. For instance, LLMs can convert plain-text test data into SQL statements or translate SQL statements into helper functions used in test automation.
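Converted SQL is easy to check before it enters a test suite. The sketch below assumes a hypothetical `users` table and uses a hand-written stand-in for the statements a model might return; it loads them into an in-memory SQLite database and compares the result with the original plain-text data.

```python
import sqlite3

# Plain-text test data as it might appear in a ticket or in test notes.
plain_text = """\
anna, 31, anna@example.com
boris, 45, boris@example.com
"""

# Stand-in for LLM output; in practice this string would be the model's answer.
generated_sql = """
INSERT INTO users (name, age, email) VALUES ('anna', 31, 'anna@example.com');
INSERT INTO users (name, age, email) VALUES ('boris', 45, 'boris@example.com');
"""


def parse_expected(text: str) -> list[tuple[str, int, str]]:
    """Parse the plain-text rows into tuples to compare against."""
    rows = []
    for line in text.strip().splitlines():
        name, age, email = (part.strip() for part in line.split(","))
        rows.append((name, int(age), email))
    return rows


def load_generated(sql: str) -> list[tuple[str, int, str]]:
    """Run the generated statements in a throwaway in-memory database."""
    connection = sqlite3.connect(":memory:")
    try:
        connection.execute("CREATE TABLE users (name TEXT, age INTEGER, email TEXT)")
        connection.executescript(sql)
        return connection.execute(
            "SELECT name, age, email FROM users ORDER BY name"
        ).fetchall()
    finally:
        connection.close()


expected = sorted(parse_expected(plain_text))
actual = load_generated(generated_sql)
print("match" if actual == expected else f"mismatch: {actual} != {expected}")
```

Running generated statements only against a disposable database keeps a wrong or malformed answer from touching real test environments.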

- Report Generation and Issues Reporting

Summarizing Notes: Although not a direct data conversion, LLMs can simultaneously transform and summarize information. They can extract raw testing notes from activities like exploratory or shift-left testing sessions and compile a summary for the development or management team.

- Test Maintenance

Automated Test Maintenance: AI-driven automation frameworks can monitor test execution results and automatically update test cases or scripts based on changes in application behavior or requirements. This helps ensure that tests remain relevant and effective as the software evolves over time.

Examples:

- Testim.io: a cloud-based platform that empowers testers with efficient test case authoring, maintenance, and execution without the need for extensive coding expertise. It also allows better categorization of test cases. One of Testim.io’s most significant advantages is its embedded self-healing mechanism.

Numerous companies (including Google, Facebook and Microsoft) are already leveraging LLMs to speed up and improve their automated testing procedures. I recently came across an article highlighting real-world examples that caught my attention: Enhancing Test Coverage with AI: Unleashing the Power of Automated Test Generation.

Trust, but verify

Russian Proverb

While LLMs hold significant potential, it’s crucial not to rely blindly on their abilities. LLMs operate based on probabilities, which differ from human reasoning, underscoring the importance of skepticism in evaluating their outputs. Given that LLM hallucinations can be very convincing, blindly trusting LLMs can easily compromise the quality of testing. Thus, it’s essential to remember that humans, not LLMs, are ultimately responsible for problem-solving, critical thinking, and decision-making.

AI + Humans

In conclusion, one of the latest episodes of TestGuild, featuring Tariq King, Chief Executive Officer and Head of Test IO, shared a profound insight:

Tariq emphasized the importance of keeping humans in the loop to ensure AI systems remain aligned with their intended objectives, thereby preventing potential harm and mitigating bias.

“AI should be something that we see as good, it helps us grow, it helps us automate and become more efficient and so on and so forth. The only way that you can actually make sure that AI serves that purpose for humans is to have humans in the loop throughout the process. Meaning, humans involved in AI development, whether it could be curation test data, whether that be mitigating unwanted bias .. You need humans in the loop to review and make sure that these systems are not deviating away from something that would be very useful into something that’s either not useful or even potentially harmful.”

- Tariq King, Chief Executive Officer and Head of Test IO

Resources:

- AI-Assisted Testing by Mark Winteringham https://www.manning.com/books/ai-assisted-testing

- GenAI for Testers Course: https://www.thetesttribe.com/courses/generative-ai-software-testing/

- Prompt Engineering Guide: https://www.promptingguide.ai/

- ChatGPT Prompt Engineering for Developers: https://www.deeplearning.ai/short-courses/chatgpt-prompt-engineering-for-developers/
